Congratulations on making it through the first month of 2022! As we prepare to enter the second month of the year, let’s take a few moments to catch up on a few news items in the privacy world.

A Flurry of State Data Privacy Bills

State legislators wasted no time introducing the latest round of data privacy bills at the start of the legislative year. Some states are reviving previously introduced bills in the hope of pushing them through in the new session, while other states are finally jumping on the bandwagon and introducing comprehensive data privacy bills for the first time since the rush for state data privacy laws began several years ago.

Of all the states introducing bills this legislative session, all eyes are on LDH’s home state, Washington State. The Washington Privacy Act (WPA), which failed to pass multiple times in previous legislative years, is back. This time, however, it faces two competing comprehensive data privacy bills. The first bill, the People’s Privacy Act, deviates from the WPA in several key places, including stricter requirements around data collection and processing (e.g., requiring covered entities to obtain opt-in consent for processing personal data), biometric data handling, and a private right of action. The second bill, the Washington Foundational Data Privacy Act, is a new bill that would create a new governmental commission, something that the other two bills lack. Each bill has its strengths and weaknesses concerning data privacy. Nevertheless, if Washington manages to pass one of these bills – or a completely different bill that has yet to be introduced – the passed data privacy bill will influence other states’ efforts in passing their privacy bills.

FLoC Flew Away

Rejoice, for FLoC is no more! We previously covered Google’s attempt to replace cookies and the many privacy issues with this attempt. The pushback from the public and organizations has finally led Google to rethink its approach. It also didn’t help that major web browsers, which were supposed to play a critical role in FLoC, refused to play along.

Nevertheless, Google didn’t completely abandon the effort to replace cookies. Google announced a new proposal, Topics, as an attempt to create a less privacy-invasive alternative to cookies. It’s still too early to tell if this FLoC alternative is truly any better than FLoC, but initial reports seem to suggest that the Topics API is an improvement. However, we did notice that some of these reports mention that users would be primarily responsible for understanding and choosing the level of tracking in browser settings. Ultimately, we are still dealing with businesses pushing to track user activity by default.

Smart Assistants Have Long Memories

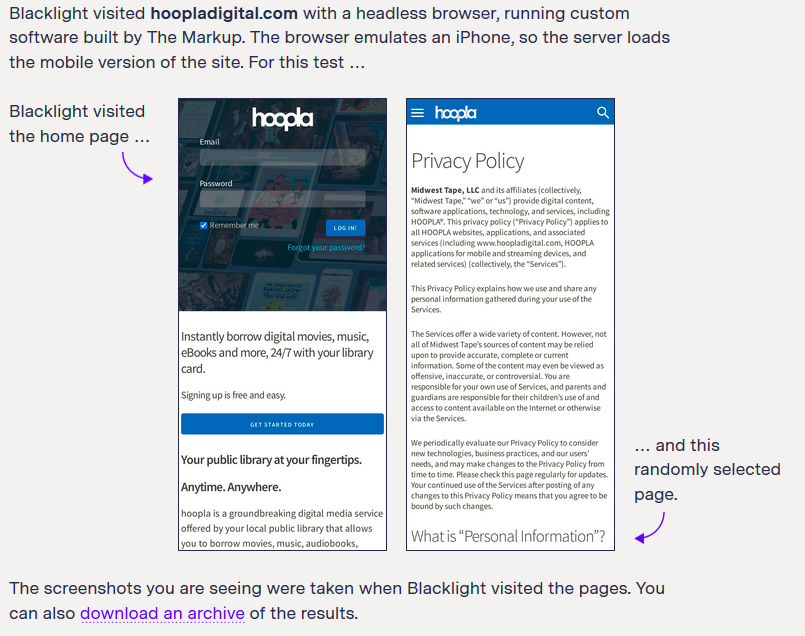

Have you requested a copy of your personal data yet? Even if you are not a resident of the EU or California, you can still request a copy of your personal data from many major businesses and organizations. This includes library vendors! Requesting a copy of your data from a company can highlight how easy it is for a company to track your use of its services. A good library-related example is OverDrive’s tracking of patron borrowing history, even though users might assume that their borrowing history isn’t being recorded after flipping a toggle to “hide” their history in user settings.

The latest example of extensive user tracking comes from a Twitter thread of a person going through the data Amazon has collected about her throughout the years, including all the times she interacted with Amazon Alexa. We’re not surprised about the level of data collection from Amazon – the tracking of page flips, notes, and other Kindle activity by Amazon has been a point of contention around library privacy for years. Instead, this is a reminder for libraries that are currently using or planning to use smart speakers and smart assistants to provide patron services that Amazon (and other companies) will, by default, collect and store the patron data generated through these services. This is also a good reminder that the smart speaker in your work or home office is listening in on your conversations, including conversations around patron data that is supposed to remain private and confidential.

If you have a smart speaker (or other smart-enabled devices with a microphone) at your library or in your home office, you might want to reconsider. The companies behind these products are not bound to the same level of privacy and confidentiality as libraries in protecting patron data. Request a copy of data collected by the company behind that smart speaker sitting in the library. How much of that data could be tied back to data about patrons? How much do your patrons know about the collection, use, and sharing of data by the company behind the smart speaker at the library? What can your library do to better protect patron privacy around the smart speaker? Chances are, you might end up relocating that smart speaker from the top of the desk to the bottom of a desk drawer.