Keeping track of the latest threats to patron data privacy and safety is easily a full-time job in quiet, uneventful times. Last week was neither quiet nor uneventful. From the possibility of increased cyber warfare in the coming weeks to the progression of anti-LGBTQIA+ and anti-CRT regulations in several US states, many library workers are rightfully feeling overwhelmed by the possible implications of these events for the patron’s right to privacy in the library. And all of this is happening while we are still in the middle of a pandemic!

This week we are going to help you, the reader, take a moment to stop, breathe, and orient yourself in light of the recent increase in threats to patron privacy. Here are three things you can do today to get started in protecting patron data privacy and security:

Reacquaint yourself and others with how to avoid phishing attempts – Libraries are no strangers to being the target of phishing attacks; however, with the possibility of increased cyber warfare, phishing attempts will only increase. As we saw with Silent Librarian, phishers are not afraid to use the library as a point of entry into the more extensive organizational network to access sensitive personal information. If you are looking for a simple explainer to share with others, the Phishing section of the Digital Basics Privacy Field Guide is an excellent way to spread awareness at your library.

(Bonus – turn on multi-factor authentication wherever possible! You can also include the Multi-Factor Authentication section from the Digital Basics Guide while talking to others in the library about MFA.)

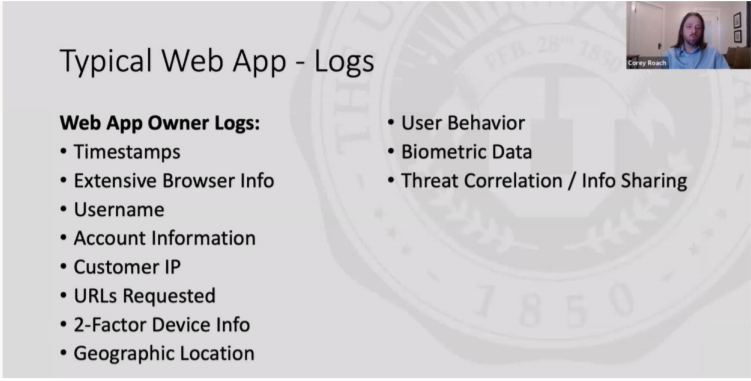

Check if your library is holding onto circulation, reference chat, and search histories – By default, your ILS should not be collecting borrowing history, but the applications you use for reference services might be retaining similar information. The same goes for your library’s catalog or discovery layer, whose system logs might be capturing patron searches. This data can be used to harm patrons, particularly those who experience greater harms when their privacy is violated, such as LGBTQIA+ students and minors. Check the system and application settings to ensure that your systems are not collecting circulation and search histories by default. Review the reference chat logs to ensure that personal patron data is not being tracked or retained in the metadata or the chat content.

(Bonus – If you find patron data that is not supposed to be there after checking and changing settings, make sure to delete it securely!)
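If your reference chat tool lets you export transcripts as plain text, a small script can give you a first pass at spotting personal data before you review the logs by hand. The sketch below is only an illustration under that assumption: the function name `find_personal_data` and both patterns are hypothetical, the phone pattern only covers common US-style numbers, and it will not catch names, library card numbers, or addresses.

```python
import re

# Illustrative patterns only; they are assumptions, not a complete
# definition of "personal data" in a chat transcript.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def find_personal_data(lines):
    """Return (line_number, kind) pairs for lines that look like they
    contain an email address or a US-style phone number."""
    findings = []
    for number, line in enumerate(lines, start=1):
        if EMAIL_RE.search(line):
            findings.append((number, "email"))
        if PHONE_RE.search(line):
            findings.append((number, "phone"))
    return findings
```

A clean result from a scan like this does not mean the logs are free of patron data; treat it as a way to prioritize which transcripts and settings to look at first.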

Check your backups – You should be checking your backups regularly, but today is a good day to do an extra round of checks on your data backups:

- Can you restore the system with the latest backup in case of a ransomware or malware attack? If you haven’t already tested your backups, you might run into unexpected issues when you attempt to restore your system after an attack. If you haven’t restored from a data backup before, schedule a backup test sooner rather than later to catch these issues while the system is still up and functional.

- Where are your backups located? Having an offline copy can mitigate the risk of loss or destruction of all copies from an attack. You also want to ensure that the backup is securely stored separately from the system or application.

- What data is being stored in the backups? Backups are subject to the same risk as other data regarding unauthorized access or government requests. This is especially important when these backups have personal data, such as a patron’s use of library resources and services. Adjust what data is being backed up daily to limit capture of such patron data and limit the number and frequency of full database backups.

- How long are you storing backups? Backups can be used to reconstruct a patron’s use of library resources and services over time. We have to balance the utility of backups against data security and privacy; the longer you keep a backup, the less valuable it will be in restoring a system and the greater the risk of that data being breached or leaked. The length of time you should retain a backup copy will depend on several factors, including whether the backups are incremental or full and what type of data is stored in the backup. Nevertheless, if you are unsure where to start, review any backups older than 60 days for possible deletion.

(Bonus – if you’re not backing up your data, now would be a perfect time to start!)
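For the 60-day review suggested above, a short script can list which backup files are candidates. This is a minimal sketch that assumes your backups are ordinary files in a directory you can read and that the file modification time reflects when the backup was made; the function name `backups_older_than` and its parameters are hypothetical. It only reports files and never deletes anything.

```python
from datetime import datetime, timedelta, timezone
from pathlib import Path


def backups_older_than(backup_dir, max_age_days=60, now=None):
    """Return (path, age_in_days) for every file under backup_dir whose
    last-modified time is more than max_age_days before `now`."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_age_days)
    stale = []
    for path in sorted(Path(backup_dir).rglob("*")):
        if not path.is_file():
            continue
        # Assumption: modification time stands in for the backup date.
        modified = datetime.fromtimestamp(path.stat().st_mtime, tz=timezone.utc)
        if modified < cutoff:
            stale.append((path, (now - modified).days))
    return stale
```

Calling `backups_older_than("/path/to/backups")` with your own backup location returns a list you can review against your retention policy before deciding what to delete securely.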

Focusing on these three actions today will provide your library with an action plan to address the increased risks to patron data privacy and security in the coming weeks and months (and even years). Even though we focused on things you can do right now, don’t forget to include in your action plan how you will work with third parties (such as vendors) in addressing the collection, retention, and sharing of patron data! And as always, we will keep you up to date on the latest news and events impacting patron data privacy and security, so make sure you subscribe to our weekly newsletter to get the latest news delivered to your inbox.